Plugins make life easier: they’re reusable, composable pieces of functionality that extend and adapt your CI/CD workflows. You can easily integrate additional features, tools, and custom scripts to improve productivity and efficiency. There’s a wide array of plugins available in our plugins directory, or you can even create your own.

Because plugins are modular by design, you can “combine” multiple plugins to carry out a series of actions in a single command step, which is awesome! But… it’s an age-old fact that as soon as dependencies are introduced, things can get complex. Often one plugin depends on the results of another, which means the plugins have to be defined together, and in a specific order, to achieve the desired result.

This blog seeks to answer one of the more common questions we hear in support requests.

Question: Why aren’t environment variables set in X plugin available to a Docker container?

Answer: Because environment variables aren’t automatically available to Docker containers.

Sharing environment variables between plugins

Multiple plugins that need to share environment variables should be defined in the plugins list of a single step. Each step runs as a separate job, possibly on a different agent, so environment variables set by a plugin in one step won’t be accessible to a plugin defined in a different step.
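To make the scoping concrete, here is a toy shell model. It is not how the Buildkite agent is implemented; it only assumes that plugins in one step share a single environment while each step starts from a fresh one (simulated here with `env -i`). The function names `plugin_a` and `plugin_b` and the `ASSUMED_ROLE_ARN` variable are hypothetical.

```shell
#!/usr/bin/env bash
# Toy model of environment scoping; NOT how the Buildkite agent works internally.
set -eo pipefail

# Hypothetical "plugin A": exports a variable for later plugins to use.
plugin_a() {
  export ASSUMED_ROLE_ARN="arn:aws:iam::AWS-ACCOUNT-ID:role/SOME-ROLE"
}

# Hypothetical "plugin B": prints the variable, or <unset> if it isn't there.
plugin_b() {
  echo "${ASSUMED_ROLE_ARN:-<unset>}"
}

# One step: both plugins share a single environment. The subshell body
# keeps the export from leaking into the rest of this script.
run_same_step() (
  plugin_a
  plugin_b
)

# Two steps: each runs in a brand-new process with an empty environment
# (only PATH is kept so bash can be found), like two separate jobs.
run_separate_steps() {
  env -i PATH="$PATH" bash -c "$(declare -f plugin_a); plugin_a"
  env -i PATH="$PATH" bash -c "$(declare -f plugin_b); plugin_b"
}

echo "same step:      $(run_same_step)"
echo "separate steps: $(run_separate_steps)"
```

Running it prints the role ARN for the same-step case and `<unset>` for the separate-steps case.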

This pipeline uses the aws-assume-role-with-web-identity plugin. It exports the AWS credentials so the assumed role can be used in the step.

steps:

- label: ":rocket: list AWS env vars"

command: env | grep AWS

plugins:

- aws-assume-role-with-web-identity:

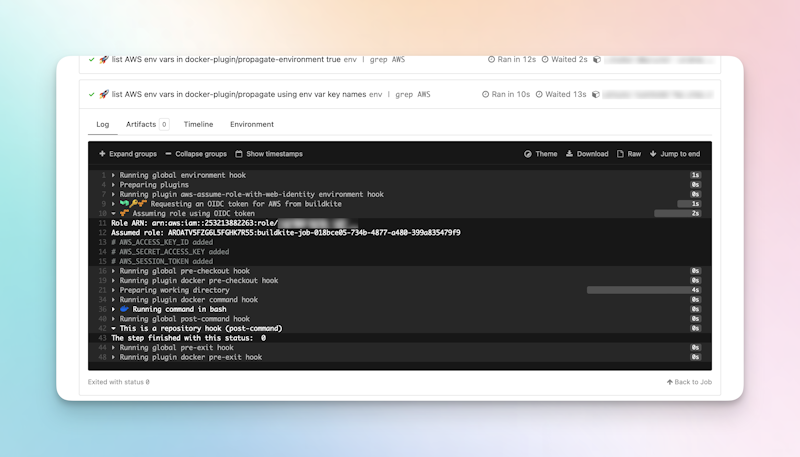

role-arn: arn:aws:iam::AWS-ACCOUNT-ID:role/SOME-ROLEThe logs show the AWS credentials have been exported.

The logs show that the AWS role has been assumed.

If we add another plugin to the same command step, we might expect the exported AWS credentials to be readily available to it. However, even when plugins are defined and run together in the same step, there are some sneaky gotchas that snag a lot of users when they first use multiple plugins with Docker.

Exposing environment variables to plugins in Docker

The following example uses the aws-assume-role-with-web-identity plugin, along with the docker plugin.

steps:

- label: ":face_with_monocle: list all env vars"

command: env

plugins:

- aws-assume-role-with-web-identity:

role-arn:arn:aws:iam::AWS-ACCOUNT-ID:role/SOME-ROLE

- docker#v5.7.0:

image: bashWhen listing the environment variables that are available to the docker plugin by default, we’d expect to see something, because we’ve already validated that we’ve correctly assumed the AWS role necessary to do this.

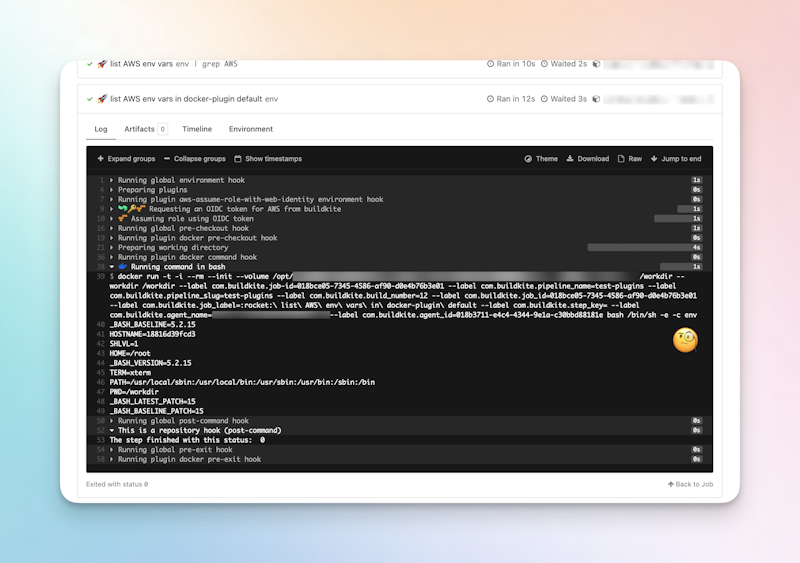

The logs however, show the environment variables were not accessible from the Docker container’s execution environment.

We don't see any environment variables in the logs.

A Docker container doesn’t inherit the environment of its host: it runs as its own isolated instance, with its own environment. The same applies to any custom plugin that runs in Docker and needs access to environment variables. In the following example, our do-something-with-aws plugin needs access to the AWS auth credentials in order to use its auth-role configuration.

```yaml
steps:
  - label: ":face_with_monocle: show user identity"
    command: bash -c 'aws sts get-caller-identity'
    plugins:
      - aws-assume-role-with-web-identity:
          role-arn: arn:aws:iam::AWS-ACCOUNT-ID:role/SOME-ROLE
      - gh-user/do-something-with-aws:
          auth-role: SOME-ROLE
```

The AWS credential environment variables need to be passed explicitly by the do-something-with-aws plugin. You can do this in the custom plugin’s script by adding the -e (or --env) flag, followed by the variable name, for each variable when running the Docker container:

```shell
docker run -e AWS_SESSION_TOKEN -e AWS_ACCESS_KEY_ID -e AWS_SECRET_ACCESS_KEY .... \
  ....
```

The plugin will then have access to the environment variables it needs to do its thing in AWS.

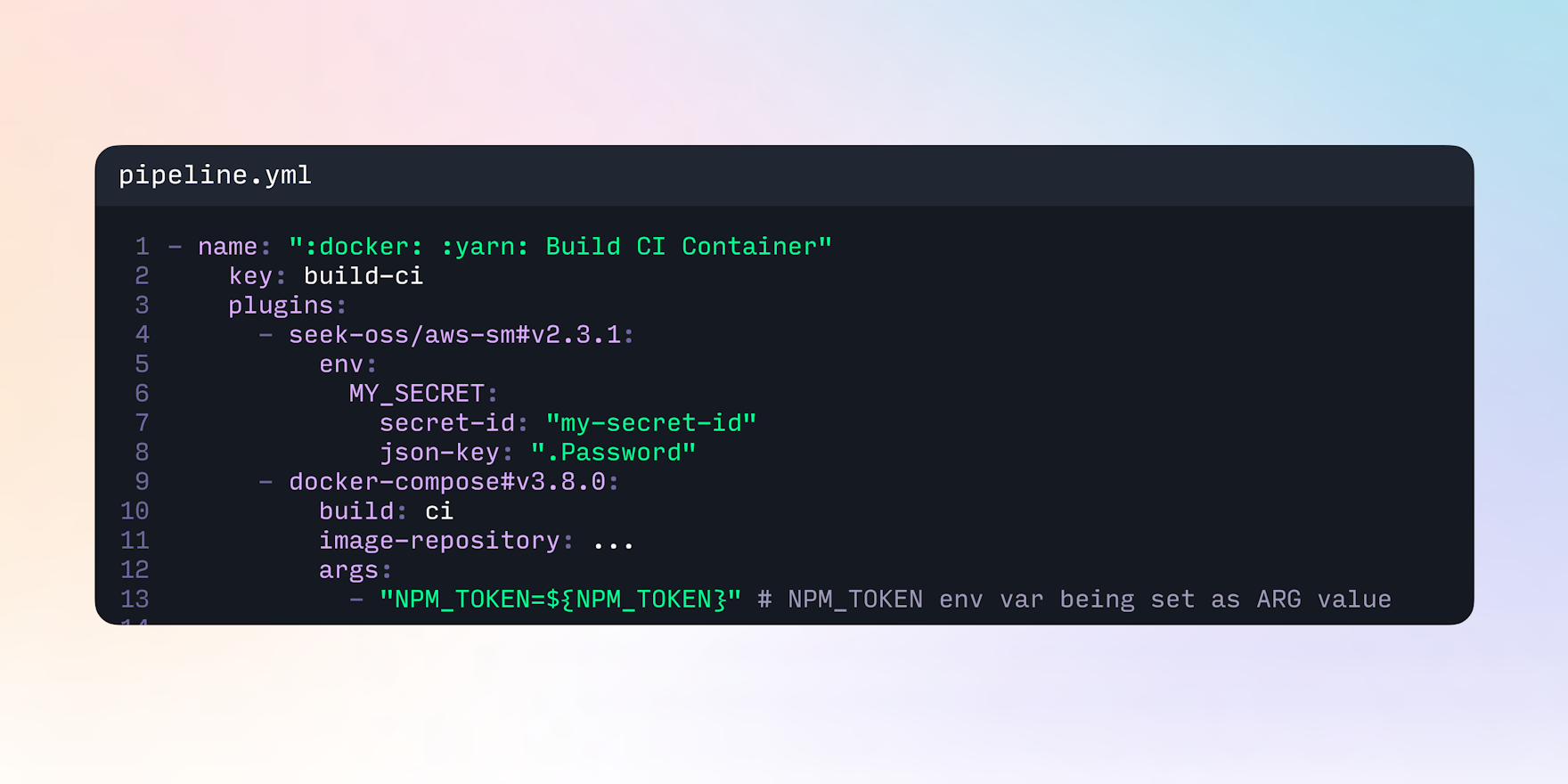
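Inside the plugin’s script, one way to build those `-e` flags is to forward only the credential variables that are actually set. This is a minimal sketch of a hypothetical hook for `gh-user/do-something-with-aws`; the `docker_env_flags` helper and the `amazon/aws-cli` image are assumptions for illustration, and the script prints the `docker run` command instead of running it so it can be tried without Docker.

```shell
#!/usr/bin/env bash
# Hypothetical command hook for gh-user/do-something-with-aws (sketch only).
set -eo pipefail

# Print "-e NAME" for every named variable that is set and non-empty here.
# `docker run -e NAME` (no "=value") copies NAME's value from the host
# environment, so the secret values never appear on the command line.
docker_env_flags() {
  local name
  local flags=()
  for name in "$@"; do
    if [[ -n "${!name:-}" ]]; then
      flags+=(-e "$name")
    fi
  done
  printf '%s\n' "${flags[*]}"
}

aws_vars=(AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY AWS_SESSION_TOKEN)

# Word splitting of the flags is intentional: variable names contain no spaces.
# shellcheck disable=SC2046
echo docker run --rm $(docker_env_flags "${aws_vars[@]}") amazon/aws-cli sts get-caller-identity
```

In a real hook you would remove the leading `echo`; other variables, such as `AWS_REGION`, can be appended to `aws_vars` in the same way.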

Exposing environment variables as argument variables for Docker Compose

Another common question that crops up is:

How do I expose an environment variable’s value to the docker-compose plugin when building a Docker image?

The Docker Compose plugin is one of our most popular plugins, so if you’re using Docker and Buildkite, you’ll likely be reaching for it: it lets you build, run, and push build steps using Docker Compose. Before getting started, it’s important to be aware that a build’s environment variables won’t automatically propagate as environment variables to the Docker image built using the docker-compose plugin.

By default, Docker Compose makes the environment variables of the shell it runs in available for interpolation in the docker-compose.yml file, but it doesn’t automatically pass them into containers. When building an image, Docker uses build arguments, also known as build-time variables, which are declared in the Dockerfile with the ARG instruction. To pass environment variables in as the image is built, the docker-compose plugin uses the args configuration.

In the following .buildkite/pipeline.yml, the ${BUILDKITE_BUILD_AUTHOR} environment variable is set as the IMAGE_AUTHOR argument variable in the args configuration.

```yaml
- name: ":docker: Build CI Container"
  key: build-ci
  plugins:
    - docker-compose#v4.15.0:
        build: app
        args:
          - IMAGE_AUTHOR=${BUILDKITE_BUILD_AUTHOR}
```

The issue in this scenario is that the IMAGE_AUTHOR variable is only available while the image is being built, not in containers later started from this image.

To make the value available in containers started from the image, use the ARG variable to set an ENV variable, as shown in the following snippet from a Dockerfile:

```dockerfile
# expect a build-time variable
ARG IMAGE_AUTHOR
# set the default value
ENV IMAGE_AUTHOR=$IMAGE_AUTHOR
```

This way, unless overridden, the default value is available to any container started from the image.

Conclusion

These are some of the most common complexities to be aware of when sharing environment variables between plugins and Docker in Buildkite.

Plugins become incredibly useful once you've mastered composing them with the right environment variables exposed and shared. You're able to:

- Abstract core pieces of functionality from your users.

- Centrally manage configurations that can be included in as many pipelines as you need.

- Do things in AWS.

Or, do almost anything you can imagine. Plugins unlock a lot of power in customizing your Buildkite pipelines.